As we move ever closer to completing the dataset, I was wondering if someone from HQ has thought about the possibility of the AI helping to identify the cells by “face recognition technology”. I have noticed that a lot of the cells follow the same pattern, so if lots of points/synapses on different cells had the same distances between them, those cells would probably be the same cell type. Being able to group even 10 identical cells together on average per group like that would probably save thousands of hours of manual labour identifying cells, for both this and future projects.
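A rough sketch of that idea, assuming each cell is available as a list of 3D synapse coordinates (a hypothetical input format, not how FlyWire actually exposes data). Each cell is described by a histogram of the distances between its synapses, and cells whose histograms are nearly identical are put in the same group. `bin_width`, `n_bins` and `threshold` are made-up parameters that would need tuning on real cells. This is a simple stand-in for illustration, not HQ’s label-suggestion tool.

```python
import itertools
import math


def distance_signature(points, bin_width=1.0, n_bins=20):
    """Normalized histogram of pairwise distances between a cell's synapse points.

    Pairwise distances don't change when a cell is shifted or rotated, so two
    cells with the same shape in different places get similar signatures.
    Cost grows with the square of the number of synapses per cell.
    """
    counts = [0] * n_bins
    pairs = 0
    for a, b in itertools.combinations(points, 2):
        d = math.dist(a, b)
        idx = min(int(d / bin_width), n_bins - 1)  # clamp long distances into the last bin
        counts[idx] += 1
        pairs += 1
    if pairs == 0:
        return [0.0] * n_bins
    return [c / pairs for c in counts]


def signature_gap(s1, s2):
    """L1 distance between two signatures (0 = identical, 2 = no overlap)."""
    return sum(abs(x - y) for x, y in zip(s1, s2))


def group_cells(cells, threshold=0.2, **sig_kwargs):
    """Greedy grouping of {cell_id: [(x, y, z), ...]}.

    Each cell joins the first group whose seed (first member) has a close
    enough signature; otherwise it starts a new group.
    """
    groups = []  # list of (seed_signature, [cell_ids])
    for cell_id, points in cells.items():
        sig = distance_signature(points, **sig_kwargs)
        for seed_sig, members in groups:
            if signature_gap(sig, seed_sig) <= threshold:
                members.append(cell_id)
                break
        else:
            groups.append((sig, [cell_id]))
    return [members for _, members in groups]
```

For example, a cell and a shifted copy of it land in one group, while a cell with a different spacing starts its own:
`group_cells({"a": [(0, 0, 0), (3, 0, 0), (0, 4, 0)], "b": [(10, 10, 10), (13, 10, 10), (10, 14, 10)], "c": [(0, 0, 0), (10, 0, 0), (0, 15, 0)]})` gives `[["a", "b"], ["c"]]`.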

Actually, I was thinking about something like this, but only thinking; I’m not (currently) planning to make an add-on for that. But you’re right: cells of the same type are quite similar and should be easy (?) for an automated system to recognize. Or at least it could recognize most of them and leave only the harder ones to the players.

Alternatively, when we finish fixing the cells, we could group them by main types (T, Tm + TmY, C, Y, L, etc.). Then recognizing each subtype would be easier if one person worked with only one type. But still, automatic recognition would be much faster.

That is the way I am doing it currently, since it goes much faster than finding the correct subtype, except for a few that are mostly easy to spot, like C2/C3 or most T types.

This is a great question. We have a similar tool for neurons of the central brain: it recommends a label based on previous labels. Let me ask about deploying it for the optic lobe.

@annkri @Krzysztof_Kruk is this the most up to date list of labels you guys have been using?

Centrifugal; C

Distal medulla; Dm

Lamina intrinsic; Lai

Lamina monopolar; L

Lamina wide field; Lawf

Lobula columnar; Lc

Lobula-complex columnar; Lccn

Lobula intrinsic; Li

Lobula plate intrinsic; Lpi

Lobula tangential; Lt

Medulla intrinsic; Mi

Medulla tangential; Mt

Optic lobe tangential; Olt

Proximal medulla; Pm

Retinula axon; R

T

Translobula; Tl

Translobula-plate; Tlp

Transmedullary; Tm

Transmedullary Y; TmY

Y

Yes, I believe it is.

OK, great, we are looking into this!

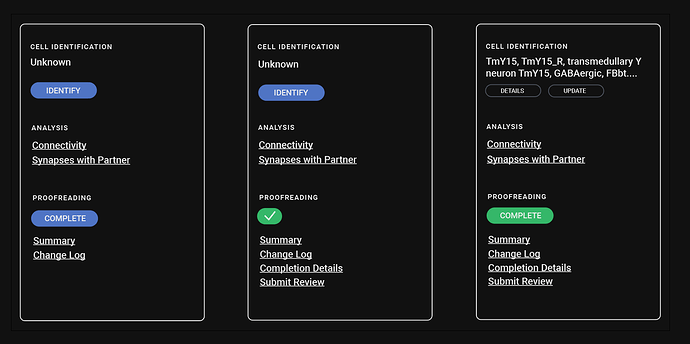

Would it be beneficial if it were easier to see whether a cell already had a label within FW? Are there any other changes that would improve labeling? I was thinking it might be nice if there were a way to sort through completed, unlabeled cells by primary neuropil. At least that would make it easier to find cells that need labels.
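A minimal sketch of what “sort completed, unlabeled cells by primary neuropil” could mean, assuming each cell record carries a completion flag, an optional label and a primary neuropil. The `Cell` fields and the neuropil strings are hypothetical, not FlyWire’s real schema.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass
class Cell:
    cell_id: int
    completed: bool          # proofreading marked as finished
    label: Optional[str]     # None or "" means no label yet
    primary_neuropil: str    # hypothetical field, e.g. "medulla"


def unlabeled_by_neuropil(cells):
    """Return {neuropil: [cell_ids]} for completed cells without a label.

    Neuropils with the most unlabeled cells come first; ties are broken
    alphabetically, and the IDs inside each neuropil are sorted.
    """
    groups = defaultdict(list)
    for cell in cells:
        if cell.completed and not cell.label:
            groups[cell.primary_neuropil].append(cell.cell_id)
    ordered = sorted(groups.items(), key=lambda item: (-len(item[1]), item[0]))
    return {neuropil: sorted(ids) for neuropil, ids in ordered}
```

Incomplete cells are skipped on purpose, so tracing work and labeling work stay separate.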

Yes, it would definitely help if the info on whether a cell is already labeled were visible directly in FW. It would be even more useful if the label itself were directly visible. That would help with finding misidentified cells and fixing them.

@Amy It would definitely be very helpful to be able to see if there is a label in FW, and being able to see what that label is directly in FW would be useful too.

Being able to sort through completed, unlabeled cells etc. would probably be useful once the dataset is complete enough that the primary concern is labeling old cells instead of tracing new ones. The problem, I think, is that there are 100 different ways to label one cell type, and we need a consistent way to label each cell to make it searchable (the list made by KK helps a lot with this). Still, not every entry will have the same level of detail (e.g. Tm instead of Tm2), so they would need to be gone through another time to get the exact label. Having the AI suggest names would probably help here, but is there any way the AI could go through every cell in the neuropil and suggest batches without us having to open every cell manually?
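One way to make inconsistent labels searchable, sketched under the assumption that existing labels are free text: map each label to its main class from the abbreviation list earlier in this thread, so “tm”, “Tm2” and “transmedullary” all end up under `Tm`. The matching rules (longest full name first, then longest abbreviation not followed by a letter) are a hypothetical heuristic, and anything that doesn’t match is returned as `None` for manual review.

```python
# Cell classes from the label list in this thread: (full name, abbreviation).
# T and Y have no separate full name in the list, hence None.
CLASSES = [
    ("Centrifugal", "C"), ("Distal medulla", "Dm"),
    ("Lamina intrinsic", "Lai"), ("Lamina monopolar", "L"),
    ("Lamina wide field", "Lawf"), ("Lobula columnar", "Lc"),
    ("Lobula-complex columnar", "Lccn"), ("Lobula intrinsic", "Li"),
    ("Lobula plate intrinsic", "Lpi"), ("Lobula tangential", "Lt"),
    ("Medulla intrinsic", "Mi"), ("Medulla tangential", "Mt"),
    ("Optic lobe tangential", "Olt"), ("Proximal medulla", "Pm"),
    ("Retinula axon", "R"), (None, "T"), ("Translobula", "Tl"),
    ("Translobula-plate", "Tlp"), ("Transmedullary", "Tm"),
    ("Transmedullary Y", "TmY"), (None, "Y"),
]

# Longest first, so "Transmedullary Y" beats "Transmedullary" and "TmY" beats "Tm".
FULL_NAMES = sorted(((f, a) for f, a in CLASSES if f), key=lambda c: -len(c[0]))
ABBREVIATIONS = sorted((a for _, a in CLASSES), key=len, reverse=True)


def canonical_class(raw_label):
    """Map a free-text label to its main class abbreviation, or None."""
    text = raw_label.strip().lower()
    for full, abbr in FULL_NAMES:
        if text.startswith(full.lower()):
            return abbr
    for abbr in ABBREVIATIONS:
        a = abbr.lower()
        # The next character must not be a letter: "Lawf2" -> "Lawf",
        # but "lamina" is not mistaken for "L".
        if text.startswith(a) and not text[len(a):len(a) + 1].isalpha():
            return abbr
    return None
```

This only recovers the main class. Getting from `Tm` to `Tm2` still needs a second pass, which is where suggested names would come in.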

Opening batches of, say, 10-50 cells (I’m not sure how many cells you could have before it becomes too much) and looking for/removing/marking any that differ from the main type before naming the whole batch would be much easier than opening them one at a time.
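A minimal sketch of the batching step, assuming some suggestion source already provides a `{cell_id: suggested_label}` mapping (hypothetical; no such export is described here). Cells are grouped by suggested label and split into batches of at most `batch_size`, with 25 chosen arbitrarily from the 10-50 range mentioned above.

```python
from collections import defaultdict


def make_batches(suggestions, batch_size=25):
    """Split {cell_id: suggested_label} into review batches.

    Returns [(label, [cell_ids])], where every batch shares one suggested
    label and holds at most batch_size cells.
    """
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    by_label = defaultdict(list)
    for cell_id, label in suggestions.items():
        by_label[label].append(cell_id)
    batches = []
    for label in sorted(by_label):
        ids = sorted(by_label[label])
        for start in range(0, len(ids), batch_size):
            batches.append((label, ids[start:start + batch_size]))
    return batches
```

A reviewer would open one batch, remove or mark the cells that don’t match the main type, and apply the label to the rest.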

Hi Krzysztof, I’m attempting to reproduce the trick you describe here about changing the resolution through the “Choose image server” dropdown. I was able to do this the other week, but now I feel like I’m looking in the wrong place. Would you be able to explain this in more depth, or confirm for me that the feature seems to have disappeared?

Hi,

You can change the resolution on any image server. Just go to the right panel (make sure you’re in the segmentation with graph tab) and, in the Rendering tab, find the “Resolution (slice)” setting. Move over it to select the target resolution (e.g. 5 px) and click on that bar.

Huh, interestingly, I still don’t feel like this is where this setting was when I modified things last week. I could have sworn there were fields to directly input 5 px and then set something to 19/19. I’ve done what you describe, but there are no fields, just that slider, and it’s set to 5 px 27/27. How did you make it 5 px 19/19?

The numbers after the px value don’t matter. They are just the number of chunks already loaded and the total number of chunks to be loaded. E.g. 19/19 means that the currently visible segments consist of 19 chunks and all of those chunks have already been loaded.

I forgot to mention this in the previous post, but in the “segmentation_with_graph” tab you can only set the resolution of the cross-sections of the visible segments. To set the resolution of the slices (2D images), you have to switch to the “Image” tab (usually the first one at the top) and change “Resolution (slice)” there (Rendering tab on the right side), for example to 5 px (again, the numbers after that are not related to the resolution).

Great, okay. So the fractions in the original post were basically there by mistake? Thank you!

Thank you!

Yes. At that time, I didn’t know what the numbers were, so I thought they were related to the resolution, because they are in the same place as the resolution value.

I think it would be practical if the multicut points were automatically deleted after a successful cut. I don’t think they are useful at all after the cut, because they are attached to the old segment (the one before splitting), so they can’t be reused for additional cuts anyway.

Hey, thanks for bringing this up, and sorry I missed this notification. I designed a new lightbulb menu, which was going into production, both to make it easier to add annotations and to display them in FlyWire, but we hit some hiccups with a lack of confidence in the labels (if we display them directly within FlyWire, they need to be accurate, and not all of them are; long, long story… anyway, I wasn’t able to add it).

Hopefully, in the future, we can generate some links/spreadsheets of broad classes that can later be used to drill down into specifics, like you mentioned in the thread earlier today.

I am unable to open any of the links from here: Sign in - Google Accounts

It just opens a new tab with no dataset or image server chosen and no 2D or 3D view, and choosing any of the image servers doesn’t help.

Hi annkri,

Uh oh, yes, these do seem to be broken. I’ll report this to the devs. Thank you for reporting!

Update: The red annotated coordinate is improperly scaled to FlyWire, which is causing the bug. In the meantime, if you zoom out in 2D, the dataset eventually shows. Then right-click around on the dataset and your cell will show in 3D. It will look like this: FlyWire (note that the red annotated point is far away from the actual 3D mesh).